This afternoon, I’ll be at MIT for this conference, sponsored by the Berkman Center for Internet and Society at Harvard and the MIT Center for Civic Media and entitled “Truthiness in Digital Media: A symposium that seeks to address propaganda and misinformation in the new media ecosystem.” Yesterday was the scholarly and intellectual part of the conference, where a variety of presenters (including yours truly) discussed the problem of online misinformation on topics ranging from climate change to healthcare, and learned about some whiz-bang potential solutions that tech folks have already come up with. Today is the “hack day” where, as MIT’s Ethan Zuckerman put it, the programmers and designers will try to think of ways to “tackle tractable problems with small experiments.”

In his talk yesterday, Zuckerman quoted a helpful, if frankly somewhat jarring, analogy for thinking about political and scientific misinformation. It’s one that has been used before in this context: you can think of the dissemination of misinformation as akin to someone being shot. Once the bullet has been fired and the victim hit, you can run to the rescue and stanch the bleeding by correcting the “facts,” usually several days later. But psychology tells us that this approach has limited value, and, to continue the analogy, it might be a lot better to secure flak jackets for future victims.

Or, better still, stop people from shooting. (I’m paraphrasing Zuckerman here; I did not take exact notes.)

From an MIT engineer’s perspective, Zuckerman noted, the key question is: Where is the “tractable problem” in this, uh, shootout, and what kind of “small experiments” might help us to address it? Do we reach the victim sooner? Is a flak jacket feasible? And so on.

The experimenters have already begun attacking this design problem: I was fascinated yesterday by a number of canny widgets and technologies that folks have come up with to try to defeat all manner of truthiness.

I must admit, though, that I’m still not sure these approaches can “scale” to the kind of mega-conundrum we’re dealing with, a problem that may or may not be tractable at all. Still, my hat is off to these folks, and the enthusiasm I detected yesterday was impressive.

Some examples:

* Gilad Lotan, VP of R&D for SocialFlow, has crunched the data on falsehoods and, um, truthoods that trend on Twitter. He’s studied which lies persist, which die quickly, and which never catch fire, and why. To stop falsehoods in their tracks, he advocates a “hybrid” approach to monitoring Twitter lies, combining the efforts of man and machine. “We can use algorithmic methods to quickly identify and track emerging events,” he writes. “Model specific keywords that tend to show up around breaking news events (think ‘bomb’, ‘death’) and identify deviations from the norm. At the same time, it’s important to have humans constantly verifying information sources, part based on intuition, and part by activating their networks.”

* Computer scientist Panagiotis Metaxas, of Wellesley College, has figured out a way to detect “Twitter bombs.” For instance, during the 2010 Senate race in Massachusetts between Scott Brown and Martha Coakley, Metaxas and his colleague Eni Mustafaraj found that a conservative group had “apparently set up nine accounts that sent 929 tweets over the course of about two hours….Those messages would have reached about 60,000 people.” Alas, the Twitter bomb was only detected after the election, once Metaxas and Mustafaraj crunched the data on 185,000 tweets.

* Tim Hwang, of the Pacific Social Architecting Corporation, introduced us to bot-ology: how people are creating programs that manipulate Twitter and even try to infiltrate social networks and movements. Hwang talked about, essentially, designing countermeasures: bots that can “out” other bots, and even serve virtuous purposes. “There’s a lot of potential for a lot of evil here,” he told The Atlantic. “But there’s also a lot of potential for a lot of good.”

* Paul Resnick, of the University of Michigan, discussed Fact Spreaders, a tool in beta that automatically finds tweets containing falsehoods and connects users to the relevant fact-check rebuttals, so they can rapidly tweet them at the misinformers (and the misinformed). It seems to me that if something like this catches on widely, it could be powerful indeed.
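Lotan’s description of the machine half of his hybrid approach (model the keywords that cluster around breaking news, then watch for deviations from the norm) can be illustrated with a simple spike detector. What follows is a minimal Python sketch under stated assumptions, not Lotan’s or SocialFlow’s actual system: the watch list, the fixed time windows, the three-standard-deviation threshold, and the function names `keyword_counts` and `flag_deviations` are all hypothetical choices made for illustration.

```python
import re
from statistics import mean, stdev

# Hypothetical watch list, taken from Lotan's own examples.
WATCH_WORDS = ("bomb", "death")


def keyword_counts(tweets, words=WATCH_WORDS):
    """Count how many tweets in one time window mention each watched keyword."""
    counts = {w: 0 for w in words}
    for text in tweets:
        # Lowercase and split into word tokens so "BOMB" and "bomb" match alike.
        tokens = set(re.findall(r"[a-z']+", text.lower()))
        for w in words:
            if w in tokens:
                counts[w] += 1
    return counts


def flag_deviations(history, current, threshold=3.0):
    """Flag keywords whose count in the current window sits more than
    `threshold` standard deviations above their mean in past windows."""
    flagged = []
    for word, count in current.items():
        past = [window.get(word, 0) for window in history]
        if len(past) < 2:
            continue  # not enough history to estimate a norm
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:
            # Perfectly flat history: any increase counts as a deviation.
            if count > mu:
                flagged.append(word)
        elif (count - mu) / sigma > threshold:
            flagged.append(word)
    return flagged


# Hypothetical per-window counts for four earlier windows.
history = [
    {"bomb": 2, "death": 5},
    {"bomb": 3, "death": 4},
    {"bomb": 1, "death": 6},
    {"bomb": 2, "death": 5},
]
print(flag_deviations(history, {"bomb": 12, "death": 5}))  # ['bomb']
```

In keeping with Lotan’s point about the human half of the approach, a flagged keyword here is only a candidate for people to verify: a spike in “bomb” says that something is being talked about, not whether what is being said is true.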

This is just a tiny sampling of the truth gadgets that people are coming up with. Ethan Siegel, a science blogger who was not at the conference (but should have been), is now working for Trap!t, an aggregator that is “trained” to find reliable news and content, and screen out bad information.

So…okay. I am very impressed by all of this wizardry, and am glad to share news of these efforts here. But now let’s ask the key question: Can it scale? Can it really make a difference?

Look: If Google were to suddenly do something about factually misleading sites showing up when you, say, search for “morning after pill” (see the fourth hit), there’s no doubt it would make a big difference. But as Eszter Hargittai of Northwestern put it in her talk yesterday (which highlighted the “morning after pill” example), Google doesn’t seem to be taking on this role. And none of us have anything remotely like the sway of Google.

Short of that, what can these kinds of efforts accomplish?

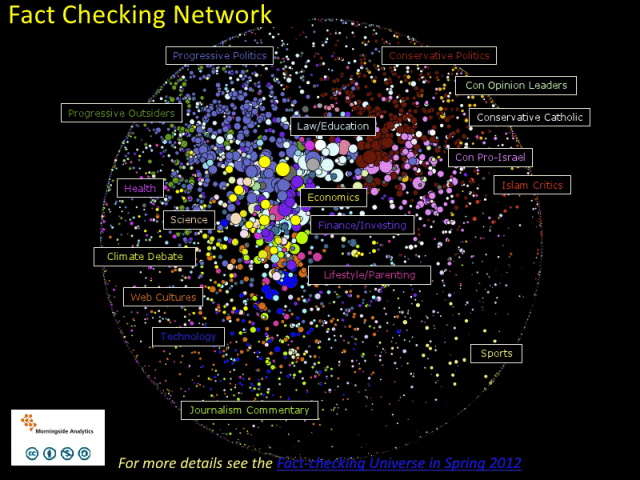

I heard a lot of impressive stuff yesterday. But what I didn’t hear—not yet anyway—was an idea that seems capable of getting past the vast and potentially “intractable” problem of information-stream fragmentation along ideological lines. The problem, I think, is captured powerfully in this image from a recent New America Foundation report on “The Fact-Checking Universe”; the image itself was originally created by a firm called Morningside Analytics.

What the image shows is an “attentive cluster” analysis of blogs that are interested in the topic of fact-checking (i.e., reality). Blogs that link to similar sites are grouped together in bubbles, or placed closer to each other, and the whole group of bubbles is organized along a left-to-right political dimension.

The image shows that although both profess to care about “facts,” progressive and conservative blogs tend to link to radically different things, i.e., to construct different realities. And that’s not all. “A striking feature of the map,” write the New America folks, “is that the mainstream progressive cluster is woven into [a] wider interest structure [of blogs that are interested in economics, law, taxes, policy, and so on], while political discourse on the right is both denser and more isolated.” In other words, conservatives interested in fact-checking are linking to their own “truths,” their own alternative “fact-checking” sites like NewsBusters.org.

What I haven’t yet heard are ideas that seem capable of breaking into hermetically sealed misinformation environments, where an endless cycle of falsehoods churns and churns–where global warming is still a hoax, and President Obama is still a Muslim, born in Kenya, and the health care bill still creates “death panels.”

Nor, for that matter, have I yet heard of a tech innovation that seems fully attuned to the psychological research that I discussed yesterday, along with Brendan Nyhan of Dartmouth. For a primer, see my Mother Jones piece on motivated reasoning and my Salon.com piece on the “smart idiot” effect; both are previews of my new book The Republican Brain. And see Nyhan’s research, which I report on in some detail in the book.

What all of this research shows—very dismayingly—is that many people do not really want the truth. They sometimes double down on wrong beliefs after being corrected, and become more wrong and harder to sway as they become more knowledgeable about a subject, or more highly educated.

Facts alone, or the rapid-fire tweeting of fact-checks, will not suffice to change minds like these. Ultimately, the psychology research says that you move people not so much through factual rebuttals as through emotional appeals that resonate with their core values. These values, in turn, shape how people receive facts: how they weave them into a narrative that imparts a sense of identity, belonging, and security.

Stephen Colbert himself, when he coined the word “truthiness,” seemed to understand this, talking about the emotional appeal of falsehoods:

> Truthiness is ‘What I say is right, and [nothing] anyone else says could possibly be true.’ It’s not only that I *feel* it to be true, but that *I* feel it to be true. There’s not only an emotional quality, but there’s a selfish quality.

As I said in my talk yesterday, there is now a “Science of Truthiness” (that was very nearly the title of my next book, though The Republican Brain is better), and it pretty much confirms exactly what Colbert said.

So unless you get the psychological and emotional piece of the truthiness puzzle right, it seems to me, you’re not really going to be able to change the minds of human beings, no matter how cool your technology.

Therefore–and ignoring for a moment whether I am sticking with “tractable” problems or not–I think these tech forays into combating misinformation are currently falling behind in three areas:

1. Speed. This is the one the programmers and designers seem most aware of. You have to be right there in real time correcting falsehoods, before they get loose into the information ecosystem and before the victim is shot. This is extremely difficult to pull off, and while I suspect progress will be made, it will be hard to really keep up with all the misinformation being spewed in real time. We may find that the best we can hope for is a stalemate in the misinformation arms race.

2. Selective Exposure. You’ve got to find ways to break into networks where you aren’t really wanted, like the alternative “fact” universe that conservatives have created for themselves. This is going to mean appealing to the values of a conservative, perhaps even talking like one. But…that sounds very bot-like, does it not? It does, unless moderate conservative and moderate religious messengers can be mobilized to make inroads into this community, again operating at rapid-fire speed.

3. We Can’t Handle the Truth. Most important, human nature itself stands in the way of these efforts. I’m still waiting for a killer app that really seems to reflect a deep understanding of how we human beings are, er, wired. We cling to beliefs, and if our core beliefs are refuted, we don’t just give them up; we double down. We come up with new reasons why they are true.

Please understand: I have no intention of raining on this parade. I’m actually feeling more optimism than I’ve felt in a long time. It’s infectious and inspiring to see brilliant people trying to take on and address discrete chunks of the misinformation problem—a problem that has consumed me for over a decade—and to do so by bringing new ideas to bear. To do so scientifically.

Still, to really get somewhere, we’ve got to wrap our heads around 1, 2, and 3 above. That’s what I’m going to tell them at the “hack day” today, and the great thing is that, unlike some of the people we’re trying to reach, I know this crowd is very open to new ideas and new information.

So here’s to finding out what actually works in our quest to make the world less “truthy”–one app at a time.


Stay up to date with DeSmog news and alerts